Abstract

In recent years, artificial intelligence-generated content (AIGC) has advanced remarkably in image synthesis and text generation, producing content comparable to that created by humans. However, the quality of AI-generated music has not yet reached this standard, primarily because it remains difficult to control musical emotions effectively and to ensure high-quality outputs. This paper presents XMusic, a generalized symbolic music generation framework that supports flexible prompts (i.e., images, videos, texts, tags, and humming) to generate emotionally controllable and high-quality symbolic music. XMusic consists of two core components, XProjector and XComposer. XProjector parses prompts of various modalities into symbolic music elements (i.e., emotions, genres, rhythms, and notes) within the projection space to generate matching music. XComposer contains a Generator and a Selector. The Generator generates emotionally controllable and melodious music based on our innovative symbolic music representation, whereas the Selector identifies high-quality symbolic music through a multi-task learning scheme involving quality assessment, emotion recognition, and genre recognition tasks. In addition, we build XMIDI, a large-scale symbolic music dataset that contains 108,023 MIDI files annotated with precise emotion and genre labels. Objective and subjective evaluations show that XMusic significantly outperforms the current state-of-the-art methods with impressive music quality. XMusic was selected as one of the nine Highlights of Collectibles at WAIC 2023.

Overview

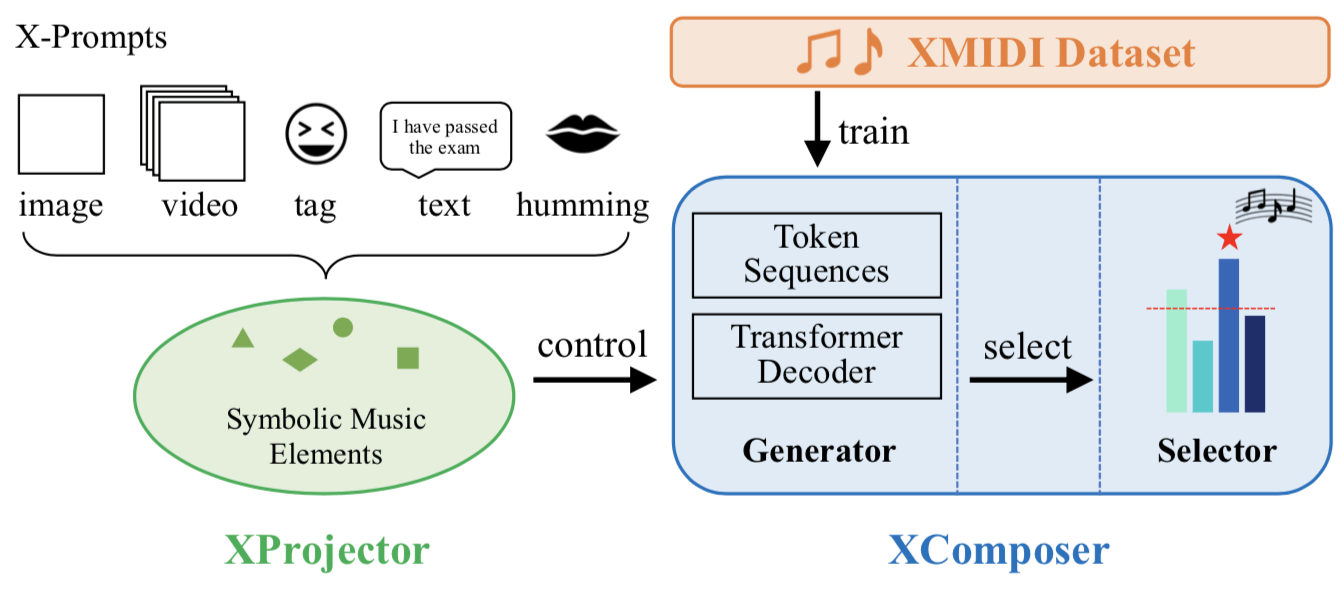

Fig.1: The architectural overview of XMusic.

Our XMusic contains two essential components: XProjector and XComposer. XProjector parses various input prompts into specific symbolic music elements. These elements then serve as control signals, guiding the music generation process within the Generator of XComposer. Additionally, XComposer includes a Selector that evaluates and identifies high-quality generated music. The Generator is trained on our large-scale dataset, XMIDI, which includes precise emotion and genre labels.
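The data flow described above (prompt, then symbolic elements, then candidate pieces, then selection) can be illustrated with a minimal Python sketch. Every name here (`MusicElements`, `project_prompt`, `generate_candidates`, `select_best`, `smoothness`) is hypothetical and is not part of the XMusic code. The prompt is assumed to be a dictionary of already-parsed tags, the generator is a toy random walk over MIDI pitch numbers, and the scoring function is a crude stand-in for the learned Selector.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class MusicElements:
    """Hypothetical container for the control signals named in the text."""
    emotion: Optional[str] = None
    genre: Optional[str] = None
    rhythm: Optional[str] = None
    notes: Tuple[int, ...] = ()


def project_prompt(prompt: dict) -> MusicElements:
    """Toy stand-in for XProjector: copy tag-like fields into MusicElements.

    The real component handles images, videos, texts, tags, and humming;
    this sketch assumes the prompt is already a dictionary of tags.
    """
    return MusicElements(
        emotion=prompt.get("emotion"),
        genre=prompt.get("genre"),
        rhythm=prompt.get("rhythm"),
        notes=tuple(prompt.get("notes", ())),
    )


def generate_candidates(elements: MusicElements, n: int, seed: int = 0) -> List[List[int]]:
    """Toy stand-in for the Generator: n random-walk melodies of 16 pitches."""
    rng = random.Random(seed)
    start = elements.notes[0] if elements.notes else 60  # 60 is middle C in MIDI
    candidates = []
    for _ in range(n):
        pitch = start
        melody = [pitch]
        for _ in range(15):
            # Step by at most two semitones and stay within a fixed pitch range.
            pitch = min(84, max(48, pitch + rng.choice([-2, -1, 0, 1, 2])))
            melody.append(pitch)
        candidates.append(melody)
    return candidates


def smoothness(melody: List[int]) -> float:
    """Placeholder quality score: a smaller total pitch movement scores higher."""
    return -float(sum(abs(b - a) for a, b in zip(melody, melody[1:])))


def select_best(candidates: List[List[int]], score: Callable[[List[int]], float]) -> List[int]:
    """Toy stand-in for the Selector: keep the highest-scoring candidate."""
    return max(candidates, key=score)


elements = project_prompt({"emotion": "happy", "genre": "pop", "notes": [64]})
candidates = generate_candidates(elements, n=4)
best = select_best(candidates, smoothness)
```

In this sketch the control signals only set the starting pitch. In XMusic, the emotion, genre, rhythm, and note elements guide generation itself, and the Selector is trained with a multi-task scheme rather than a hand-written score.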

XMIDI Dataset

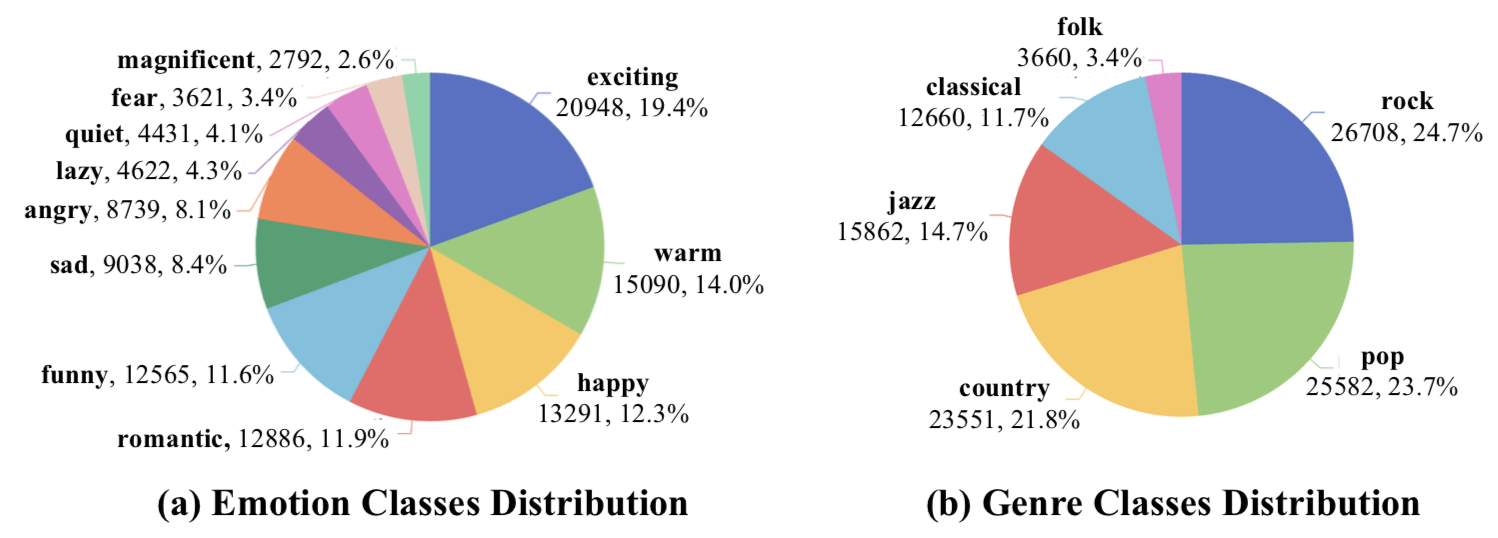

Fig.2: Data statistics of our XMIDI dataset.

Existing publicly available symbolic music datasets suffer from limitations in both scale and label completeness. To address this gap, we built XMIDI, the largest known symbolic music dataset with precise emotion and genre labels, comprising 108,023 MIDI files. The average duration of the music pieces is around 176 seconds, resulting in a total dataset length of around 5,278 hours.
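As a quick consistency check on these figures, the short calculation below multiplies the file count by the rounded average duration and, in the other direction, recovers the average duration implied by the reported total. The variable names are illustrative only, and the small gap between the two totals is assumed to come from rounding the average to 176 seconds.

```python
NUM_FILES = 108_023            # MIDI files in XMIDI (from the text)
AVG_SECONDS_ROUNDED = 176      # "around 176 seconds" (rounded value from the text)
REPORTED_TOTAL_HOURS = 5_278   # "around 5,278 hours" (from the text)

# Total length if every piece lasted exactly the rounded average.
estimated_hours = NUM_FILES * AVG_SECONDS_ROUNDED / 3600

# Average duration implied by the reported total length.
implied_avg_seconds = REPORTED_TOTAL_HOURS * 3600 / NUM_FILES

print(f"estimated total: {estimated_hours:.0f} h")       # about 5281 h
print(f"implied average: {implied_avg_seconds:.1f} s")   # about 175.9 s
```

The two totals differ by only a few hours, so the reported numbers agree to within the precision of the stated average.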

The XMIDI dataset is available for download.